- Delete anaconda navigator mac how to
- Delete anaconda navigator mac install
- Delete anaconda navigator mac windows

Step 1: Find the Conda environment to delete

To find the name of the environment you want to delete, list all Conda environments with `conda env list`. In the commands below, the environment to delete is named `corrupted_env`.

Step 2: Leave the environment

You cannot delete the Conda environment you are currently in. To leave the current environment, run `conda deactivate`.

Step 3: Delete the Conda environment

To delete `corrupted_env`, run `conda env remove -n corrupted_env`. Alternatively, use `conda remove --name corrupted_env --all`. If you know the path where a Conda environment is located, you can specify the path instead of the environment name: `conda env remove -p /path/to/env`. Deleting the directory where the Conda environment is stored directly is not advised.
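The three steps above can also be scripted. The following is a minimal Python sketch. It assumes `conda` is on your `PATH` and that `conda env list --json` prints a JSON object whose `envs` key lists environment paths. The helper names (`list_env_paths`, `find_env_path`, `build_remove_command`, `is_active`, `remove_env`) are hypothetical. The active-environment check relies on the `CONDA_PREFIX` variable that `conda activate` sets.

```python
import json
import os
import subprocess
from typing import List, Optional


def list_env_paths(conda_json: str) -> List[str]:
    """Return environment paths from the output of `conda env list --json`."""
    return json.loads(conda_json).get("envs", [])


def find_env_path(env_paths: List[str], name: str) -> Optional[str]:
    """Step 1: find an environment's path by its directory name, e.g. corrupted_env."""
    for path in env_paths:
        if os.path.basename(os.path.normpath(path)) == name:
            return path
    return None


def is_active(env_path: str) -> bool:
    """Step 2 guard: conda sets CONDA_PREFIX to the active environment's path."""
    active = os.environ.get("CONDA_PREFIX")
    return active is not None and os.path.normpath(active) == os.path.normpath(env_path)


def build_remove_command(name: Optional[str] = None, prefix: Optional[str] = None) -> List[str]:
    """Step 3: build `conda env remove` with either -n NAME or -p PATH (exactly one)."""
    if (name is None) == (prefix is None):
        raise ValueError("pass exactly one of name or prefix")
    if name is not None:
        return ["conda", "env", "remove", "-n", name]
    return ["conda", "env", "remove", "-p", prefix]


def remove_env(name: str) -> None:
    """Run the three steps against the real conda CLI."""
    listing = subprocess.run(
        ["conda", "env", "list", "--json"], capture_output=True, text=True, check=True
    )
    path = find_env_path(list_env_paths(listing.stdout), name)
    if path is None:
        raise SystemExit(f"no environment named {name!r}")
    if is_active(path):
        raise SystemExit("run `conda deactivate` first: the active environment cannot be removed")
    # stdin is inherited, so any confirmation prompt from conda reaches the terminal.
    subprocess.run(build_remove_command(name=name), check=True)
```

Call `remove_env("corrupted_env")` from a shell where `corrupted_env` is not active. Matching by directory name means the `base` environment, whose directory is typically named after the Anaconda install (e.g. `anaconda3`), is not selected when you pass a name.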

Delete anaconda navigator mac install

You might get the warning "WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform" for the second command. You can ignore it for now. If you don't have Spyder in Anaconda, install it by selecting the Install option in Navigator. After installing, write a PySpark program in Spyder and run it by pressing F5 or by selecting the Run button in the menu. The sample program creates a SparkSession with `spark = SparkSession.builder.appName('').getOrCreate()` and then builds a DataFrame with `df = spark.createDataFrame(data).toDF(*columns)`. This completes installing PySpark in Anaconda, validating it, and running it in Jupyter Notebook and the Spyder IDE.
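A minimal sketch of such a Spyder script follows. The rows, column names, and application name are hypothetical placeholders, because the original sample data was not preserved. `SparkSession.builder`, `createDataFrame`, `toDF`, and `stop` are standard PySpark calls.

```python
# Hypothetical sample data; the original program's rows were not preserved.
COLUMNS = ["language", "users_count"]
DATA = [("Java", 20000), ("Python", 100000), ("Scala", 3000)]


def main() -> None:
    # Imported inside the function so the module loads even where PySpark is absent.
    from pyspark.sql import SparkSession

    # "anaconda-pyspark-check" is a hypothetical application name.
    spark = SparkSession.builder.appName("anaconda-pyspark-check").getOrCreate()
    try:
        # createDataFrame infers column types; toDF renames _1, _2 to COLUMNS.
        df = spark.createDataFrame(DATA).toDF(*COLUMNS)
        df.printSchema()
        df.show()
    finally:
        spark.stop()  # release the local Spark driver when the script ends
```

Add a call to `main()` at the end of the file, or wrap it in an `if __name__ == "__main__":` guard, before pressing F5.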

If you don't have Jupyter Notebook installed in Anaconda, install it by selecting the Install option. After installing, open Jupyter by selecting the Launch button. This opens Jupyter Notebook in your default browser. Now select New -> PythonX, enter your PySpark code, and select Run. In Jupyter, each cell is a statement, so you can run each cell independently when it has no dependencies on previous cells. If you get a PySpark error in Jupyter, first run commands in a notebook cell to locate the PySpark installation. Then run a few PySpark commands to make sure PySpark is working in Jupyter.
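One common way to locate PySpark from a notebook is the third-party `findspark` package. Using it here is an assumption and may not match the commands the original tutorial used. The sketch assumes `findspark` is installed in the kernel's environment, for example with `pip install findspark`. It also assumes `SPARK_HOME` points to your Spark installation whenever PySpark is not already importable as a regular Python package. The function names are hypothetical.

```python
import importlib.util


def pyspark_importable() -> bool:
    """True if `import pyspark` would succeed in the current kernel."""
    return importlib.util.find_spec("pyspark") is not None


def ensure_pyspark() -> None:
    """Make `import pyspark` work, falling back to findspark when needed."""
    if pyspark_importable():
        return  # PySpark is already on sys.path (e.g. installed with conda or pip)
    # Assumption: the third-party findspark package is installed.
    import findspark

    # Reads SPARK_HOME (or checks common install locations) and adds
    # Spark's Python libraries (pyspark, py4j) to sys.path.
    findspark.init()
```

In a notebook cell, call `ensure_pyspark()`, then run `from pyspark.sql import SparkSession`, `spark = SparkSession.builder.getOrCreate()`, and `spark.range(5).show()` to confirm that PySpark works.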

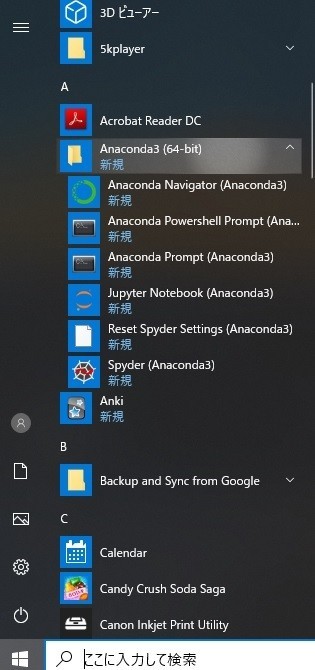

Anaconda Navigator is a desktop UI application for managing Anaconda packages, environments, etc. On a Mac, you can find it in Finder => Applications or in Launchpad.

Delete anaconda navigator mac windows

Now open Anaconda Navigator. On Windows, open it from the Start menu or by typing Anaconda in the search box.

Delete anaconda navigator mac how to

Let's create a PySpark DataFrame with some sample data to validate the installation. Enter the commands in the PySpark shell in order, starting with the sample `data = …` list. Note that the SparkSession `spark` and the SparkContext `sc` are available by default in the PySpark shell. For more examples, refer to PySpark Tutorial with Examples. You can also open the Spark Web UI in your web browser to monitor your jobs. With this step, PySpark is installed in Anaconda, and the installation is validated by launching the PySpark shell and running a sample program. Now let's see how to run a similar PySpark example in Jupyter Notebook.
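In the PySpark shell, `spark` and `sc` already exist, so a validation snippet only needs sample rows. The sketch below wraps the steps in a hypothetical `validate(spark, sc)` function and uses made-up data. It builds an RDD with `sc.parallelize`, converts the RDD with `spark.createDataFrame(...).toDF(*columns)`, and prints the Spark Web UI address from `sc.uiWebUrl`.

```python
# Hypothetical sample rows; replace them with your own data.
columns = ["name", "salary"]
data = [("James", 3000), ("Anna", 4100)]


def validate(spark, sc):
    """Create a DataFrame using the shell's predefined `spark` and `sc`; return its row count."""
    rdd = sc.parallelize(data)                      # distribute the rows as an RDD
    df = spark.createDataFrame(rdd).toDF(*columns)  # infer types, then name the columns
    df.show()
    # Address of this application's Spark Web UI (None if the UI is disabled).
    print(sc.uiWebUrl)
    return df.count()
```

In the shell, paste the definitions and call `validate(spark, sc)`. It shows the two sample rows, prints the Web UI address, and returns `2`.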
